Loading Multiple JSON Files Into Different Tables Using AZURE DATA FACTORY

TASK:

Loading Multiple JSON Files Into Azure SQL Using AZURE DATA FACTORY

SOLUTION:

In Azure first we need load the given files into blob ,after that we need to create a pipeline .

The following will show you that entire pipe line diagram.Here I have used several activities namely GETMETA DATA, FILTERS,FOREACH,COPY ACTIVITY, STORED PROCEDURES etc.

GETMETA DATA à it will give different properties of data , and it will give acknowledgement for loaded multiple files.

FILTERS à Filters will used to filter the files based on the given requirements like ‘File starting name’, ‘file ending name’ and some string functions etc.

FOR EACH à For each activity is loop the process means if we need to do same operation more than one time at that time we will use FOR EACH Activity.

COPY ACTIVITY à Copy Activity will be used to copy the files from one location to another location .

STORED PROCEDURES à STORED PROCEDURES activity is used for call the STORED PROCEDURES from AZURE SQL.

These five Activities are used in these task

STEP 1:

1) ADD GETMETA DATA activity into the pipeline

2) Select or create DATA SET for GETMETA DATA

3) In data set source will be folder which consist of the json format files

![]()

![]()

![]()

4) ![]()

![]() The above figure consist of FILED LIST à here we need to add new ARGUMENT and select CHILD ITEMS means the child items nothing but files.

The above figure consist of FILED LIST à here we need to add new ARGUMENT and select CHILD ITEMS means the child items nothing but files.

5)The following diagram specifies the file path and linked service .

STEP 2:

FILTERING FILES :

Up to now I have loaded all the files through GETMETA DATA Activity now I have divided the files based on the names by using FILTER ACTIVITY.

1) As my task I need to load 5 files where are 2 files are same and 3 files are different so that I have used 4 filers in this task.

2) Each filter will divide the files based on the starting names.

3) In filter setting there are two requirements is there are ITEMS,CONDITION

Items à here we need to ADD the Dynamic content here I have added “ “@activity(‘getmetadata1’).output.childitems. Means that the filter activity takes output of the get metadata.

I have given condition as “startswith(item().name,’employee’)”

This condition will get the file which file starting with name of employee.

STEP 3:

FOR LOOP ACTIVITY :

I have used for loop activity because if in case the files count will be more than one we need to automate the process so that I have used for loop.

In for each

1) àclick on settingsà click on ITEMS à click on ADD DYNAMIC CONTENTàdrag the ACTIVITY OUTPUTSàselect the filter which you have given connection t the for each , Hence the content will be added as shown the picture.

2) Click on ACTIVITIES in foreach , here we need to the activities which we need , AS per my requirement I have added 3 activities one copy activity and two Stored procedures.

COPY ACTIVITY :

IN COPY ACTIVITY :

IN COPY ACTIVITY :

1)Source :

DATA SET which you have given for ‘GET METADATA’ here we need give wildcard file path à enable wildcard file pathàclick on file name à add dynamic contentà select for each which we are creating activities à it will give à@item()à I had added ‘.name’ as a extenstionà finally the file name is “ item( ).name ” as shown in picture.

2)Sink :

3)MAPPING :

After sink click on mapping click on import schema , it will ask for the value ,in value give the “filename” which you filtered. I have given the name as à ‘‘employee.json”

After getting schema we need map type of the column ,some times we need to add column names also.

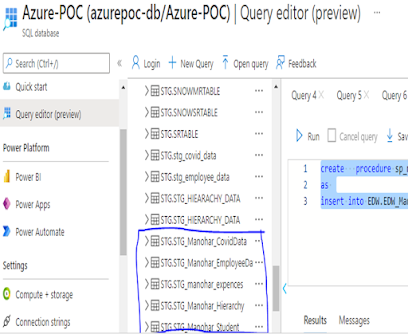

4)Stored Procedures :

After that I have used Stored Procedures , Before that we need to create the stored procedure .

Why Stored Procedures ?

In requirement initially the data should be loaded into Stage layer after that we need load the data into DET (Data Element Type) à after that we need to load the data into EDW (Enterprise Data Warehouse).

In DET I have performed several transformations as per requirement.

Stored Procedures 1: (STG to DET)

create procedure sp_mR_employdata_proc1

as

insert into DET.DET_Manohar_EmployeeData

SELECT

src.[id] ,

src.[name],

src.[email] ,

src.[password] ,

src.[about] ,

src.[token] ,

src.[country] ,

src.[location] ,

src.[lng] ,

src.[lat] ,

src.[dob] ,

src.[gender] ,

src.[userType] ,

src.[userStatus] ,

src.[profilePicture] ,

src.[coverPicture] ,

src.[enablefollowme] ,

src.[sendmenotifications] ,

src.[sendTextmessages] ,

src.[enabletagging] ,

src.[createdAt] ,

src.[updatedAt] ,

src.[livelng] ,

src.[livelat] ,

src.[liveLocation] ,

src.[creditBalance] ,

src.[myCash],

(src.[name]+'-'+src.[email]+'-'+src.[password])AS DETAILS

from STG.STG_Manohar_EmployeeData as src

left join

DET.DET_Manohar_EmployeeData tgt

on

src.[id]=tgt.[id] where tgt.[id] is null

Stored Procedures 2: (DET to EDW)

create procedure sp_mR_employdata_proc2

as

insert into EDW.EDW_Manohar_EmployeeData select * from DET.DET_Manohar_EmployeeData

*****Therefore For all activities I have done as same as above .*****

Stored Procedures 1 and 2 for Hierarchy

create procedure sp_mR_Hierarchy_proc1

as

insert into DET.DET_Manohar_Hierarchy

SELECT

src.[feeds_id],

src.[title],

src.[feeds_description],

src.[location],

src.[lng],

src.[lat],

src.[userId] ,

src.[feeds_name],

src.[isdeleted],

src.[profilePicture] ,

src.[videoUrl] ,

src.[images],

src.[feeds_mediatype] ,

src.[imagePaths],

src.[feedsComment],

src.[commentCount],

src.[feeds_multiMedia_id],

src.[feeds_multiMedia_name],

src.[feeds_multiMedia_description],

src.[url] ,

src.[feeds_multiMedia_mediatype] ,

src.[likeCount] ,

src.[place],

src.[createAt],

src.[likes],

src.[dislikes] ,

src.[userAction] ,

src.[createdAt] ,

src.[code],

src.[msg] ,

src.[totalFeed]

from STG.STG_HIERARCHY_DATA as src

left join

DET.DET_Manohar_Hierarchy tgt

on

src.[feeds_id]=tgt.[feeds_id] where tgt.[feeds_id] is null

----------------------------------------sp-2---------------------------------------------

create procedure sp_mr_hierachy

as

insert into EDW.EDW_Manohar_Hierarchy select * from DET.DET_Manohar_Hierarchy

Stored Procedures 1 and 2 for covid data

CREATE procedure sp_manohar_covid

as

insert into DET.DET_manohar_coviddata

SELECT src.[Sno],

src.[Date],

src.[Time],

src.[State/UnionTerritory],

src.[ConfirmedIndianNational],

src.[ConfirmedForeignNational],

src.[Cured],

src.[Deaths],

src.[Confirmed],

substring(src.[Date], 9, 2) + '-' + substring(src.[Date], 6, 2) + '-' + substring(src.[Date], 1, 4)as converteddate,

(substring(src.[Date], 9, 2) + '-' + substring(src.[Date], 6, 2) + '-' + substring(src.[Date], 1, 4)+'--'+src.[Time])as Date_time

from STG.STG_Manohar_CovidData as src

left join

DET.DET_manohar_coviddata tgt

on

src.[Sno]=tgt.[Sno] where tgt.[Sno] is null

---------------------------------------------sp-2--------------------------------------------------create procedure sp_edw_manohar_covid

as

insert into EDW.EDW_manohar_coviddata select * from DET.DET_manohar_coviddata

Stored Procedures 1 and 2 for Student Data

create procedure sp_mr_studentdata_proc1

as

insert into DET.DET_manohar_studentdata

SELECT

src.[ID] ,

src.[LastName] ,

src.[FirstName] ,

src.[City] ,

src.[State] ,

src.[Gender] ,

src.[StudentStatus] ,

src.[Major] ,

src.[Country] ,

src.[Age] ,

src.[SAT] ,

src.[Grade] ,

src.[Height] ,

(src.[FirstName] +' '+src.[LastName])as full_name

from STG.STG_Manohar_Student as src

left join

DET.DET_manohar_studentdata tgt

on

src.[ID]=tgt.[ID] where tgt.[ID] is null

------------------------------sp-2----------------------------------------------------------------------

create procedure sp_mr_studentdata_proc2

as

insert into EDW.edw_student_data select* from DET.DET_manohar_studentdata

Archive json files:

|

| Saving source files |

1) Finally I have saved source files into another file as per requirement .

2) For archiving files I have used one FOREACH in for each activity I have used one COPY ACTIVITY.

3) In Copy Activity source is data set what I have given in GETMETA DATA . and sink is the new folder in storage account.

DELETING THE SOURCE FILES :

1)As per requirement I need to delete the source file so that I have used DELETE Activity.

2)Data set is same as given for GETMETA DATA activity.

|

| Deleting source files |

Here I have created one linked service which represent the source files

In FOLDER PATH I have given the path of source files.

STAGE TABLES IN AZURE SQL:

DET TABLES IN AZURE SQL:

EDW TABLES IN AZURE SQL:

OUTPUTS:

Comments

Post a Comment